Davos 2024: Technology in a Turbulent World

Podcast Transcript

This transcript has been generated using speech recognition software and may contain errors. Please check its accuracy against the audio.

Fareed Zakaria, Host, GPS, CNN: We are going to try to solve the problem of AI in this panel. We have 45 minutes. Henry Kissinger used to say, people who need no introduction crave it the most, so I'm going to abandon that rule. I think nobody here needs an introduction. You know who they all are and I'm going to assume none of them particularly care.

Let me start with you, Sam. I think most people are worried about two kinds of opposite things about AI. One is that it's going to end humankind as we know it, and the other is: why can't it drive my car?

Where do you think realistically we are with artificial intelligence right now? For you, what are the things it can do most effectively? And what are the things we need to understand that it cannot do?

Sam Altman, Chief Executive Officer, OpenAI OpCo, LLC: I think a very good sign about this new tool is that even with its very limited current capability and its very deep flaws, people are finding ways to use it for great productivity gains or other gains and understand the limitations.

So, a system that is sometimes right, sometimes creative and often totally wrong: you actually don't want that to drive your car, but you're happy for it to help you brainstorm what to write about or help you with code that you get to check.

Fareed Zakaria, GPS, CNN: Help us understand why can't it drive my car.

Sam Altman, OpenAI OpCo, LLC: Well there are great self-driving car systems but like at this point, you know, Waymo is around San Francisco and there are a lot of them and people love them. What I meant is like the sort of OpenAI-style of model, which is good at some things but not good at sort of a life-and-death situation.

But, I think people understand tools and the limitations of tools more than we often give them credit for. And people have found ways to make ChatGPT super useful to them and understand what not to use it for, for the most part.

So I think it's a very good sign that even at these systems' current extremely limited capability levels, you know, much worse than what we'll have this year, to say nothing of what we'll have next year, lots of people have found ways to get value out of them and also to understand their limitations.

So, you know, I think AI has been somewhat demystified, because people really use it now, and that's, I think, always the best way to pull the world forward with a new technology.

Fareed Zakaria, GPS, CNN: The thing that I think people worry about is the ability to trust AI. At what level can you say, I'm really okay with the AI doing it, you know, whether it's driving the car, writing the paper or filling out the medical form?

And part of that trust, I think always comes when you understand how it works. And one of the problems AI researchers have, AI engineers have, is figuring out why it does what it does, how the neural network operates, what weights it assigns to various things.

Do you think that we will get there or is it getting so inherently complicated that we are at some level just going to have to trust the black box?

Sam Altman, OpenAI OpCo, LLC: So on the first part of your question, I think humans are pretty forgiving of other humans making mistakes, but not really at all forgiving of computers making mistakes.

And so people who say things like, well, you know, self-driving cars are already safer than human-driven cars - it probably has to be safer by a factor of, I would guess like, between 10 and 100 before people will accept it, maybe even more.

And I think the same thing is gonna happen for other AI systems, caveated by the fact that if people know, if people are accustomed to using a tool and know it may be totally wrong, that's kind of okay. I think, you know, in some sense, the hardest part is when it's right 99.99% of the time, and you let your guard down.

I also think that what it means to verify or understand what's going on is going to be a little bit different than people think right now.

I actually can't look in your brain and look at the 100 trillion synapses and try to understand what's happening in each one and say, okay, I really understand why he's thinking what he's thinking, you're not a black box to me.

But, what I can ask you to do is: explain to me your reasoning. I can say: you think this thing why? And you can explain first this, then this, then there's this conclusion, then that one and then there's this. And I can decide if that sounds reasonable to me or not.

And I think our AI systems will also be able to do the same thing. They'll be able to explain to us in natural language, the steps from A to B, and we can decide whether we think those are good steps, even if we're not looking into it and saying, okay I see each connection here.

I think we'll be able to do more to X-ray the brain of an AI than to X-ray the brain of you and understand what those connections are, but at the level that you or I will have to sort of decide, do we agree with this conclusion? We'll make that determination the same way we'd ask each other: explain to me your reasoning.

Fareed Zakaria, GPS, CNN: One of the things, you and I talked earlier, and one of the things you've always emphasized was that you thought AI can be very friendly, very benign, very empathetic, and I want to hear from you what you think, what do you think is left for a human being to do if the AI can out-analyze a human being, can out-calculate a human being. A lot of people then say, well, that means what we will be left with, our core innate humaneness, will be our emotional intelligence, our empathy, our ability to care for others. But do you think AI could do that better than us as well? And if so, what's the core competence of human beings?

Sam Altman, OpenAI OpCo, LLC: I think there will be a lot of things. Humans really care about what other humans think, that seems very deeply wired into us.

So chess was one of the first like victims of AI right? Deep Blue could beat Kasparov whenever that was, a long time ago, and all of the commentators said this is the end of chess. Now that a computer can beat the human you know, no one's going to bother to watch chess again or play chess again.

Chess has, I think, never been more popular than it is right now. And if you, like, cheat with AI, that's a big deal. No one, or almost no one, watches two AIs play each other. We're very interested in what humans do.

When I read a book that I love, the first thing I do when I finish is I want to know everything about the author's life and I want to feel some connection to the person that made this thing that resonated with me.

And, you know, that's the same thing for many other products. Humans know what other humans want very well. Humans are also very interested in other people. I think humans are going to have better tools. We've had better tools before, but we're still like very focused on each other and I think we will do things with better tools.

And I admit it does feel different this time. General purpose cognition feels so close to what we all treasure about humanity, that it does feel different.

So, of course, you know, there'll be kind of the human roles where you want another human, but even without that, when I think about my job, I'm certainly not a great AI researcher. My role is to figure out what we're going to do, think about that and then work with other people to coordinate and make it happen.

And I think everyone's job will look a little bit more like that. We will all operate at a little bit higher level of abstraction. We will all have access to a lot more capability, and we will still make decisions. They may trend more towards curation over time, but we'll make decisions about what should happen in the world.

Fareed Zakaria, Host, GPS, CNN: Marc, let me bring you in as the other technologist here. What do you think of what Sam seems to be saying, that it is those emotional, teamwork kinds of qualities that become very important?

You have this massive organization, you use massive amounts of technology. What do you think human beings are going to be best at in a world of AI?

Marc Benioff, Chair and Chief Executive Officer, Salesforce Inc: Well, I don't know, when do you think we're going to have our first WEF panel moderated by an AI?

You know, I've been sitting on this stage for a lot of years, I'm always looking down at someone. Got a great moderator right here with you, but maybe it's not that far away. Maybe pretty soon, in a couple of years, we're going to have a WEF digital moderator sitting in that chair moderating this panel and maybe doing a pretty good job because it's going to have access to a lot of the information that we have.

It's going to evoke the question of are we going to trust it. I think that trust kind of comes up the hierarchy pretty darn quick. You know, we're gonna have digital doctors, digital people, these digital people are going to emerge and there's going to have to be a level of trust.

Now today, when we look at the AI, we look at the gorgeous work that Sam has done, and so many of the companies that are here that we've met with like Cohere and Mistral and Anthropic and all the other model companies that are doing great things. But we all know that there's still this issue out there called hallucinations. And hallucinations is interesting, because it's really about those models, they're fun, we're talking to them, and then they lie. And then you're like, whoa, that isn't exactly true.

I was at a dinner last night, we were having this great dinner with some friends and we were asking the AI about one of my dinner guests and the AI said this person is on the board of this hospital. And she turned to me, she goes, no, I'm not. And we've all had that experience, haven't we?

We have to cross that bridge. We have to cross the bridge of trust. It's why we went to this UK Safety Summit. We all kind of piled in there a couple of months ago and it was really interesting because it was the first time that technology leaders kind of showed up and every government technology minister from every country, it was amazing actually, I've never really seen anything like it.

But everyone's there because we realized we are at this threshold moment. We're not totally there yet. We're at a moment, there's no question because we're all using Sam's product and other products and going wow, we're having this incredible experience with an AI. We really have not quite had this kind of interactivity before. But we don't trust it quite yet.

So we have to cross trust. We have to also turn to those regulators and hey, if you look at social media over the last decade, it's been kind of a fucking shit show. It's pretty bad. We don't want that in our AI industry. We want to have a good healthy partnership with these moderators and with these regulators.

I think that begins the power of kind of where we're going. And when I talk to our customers about what they want, they don't really know exactly what they want. I mean, they know they want more margin, you know, Julie will tell you that. They want more productivity, they want better customer relationships.

But, at the end of the day, you know, they're going to turn to these AIs and are they going to replace their employees or are they going to augment their employees? And today, the AI is really not at a point of replacing human beings. It's really at a point where we're augmenting them.

So I would probably not be surprised if you used AI to kind of get ready for this panel and asked ChatGPT some really good questions. Hey, what are some good questions I could ask Sam Altman on the state of AI? It made you a little better, it augmented you.

You know, my radiologist is using AI to help read my CT scan and my MRI and this type of thing. We're just about to get to that breakthrough where we're going to go, wow, it's almost like it's a digital person. And when we get to that point, we're going to ask ourselves, do we trust it?

Fareed Zakaria, Host, GPS, CNN: All right. For the record, I did not use ChatGPT to prepare for this panel.

Julie, people talk about AI at a level of abstraction, but I feel like people are trying to understand, how does it really improve productivity exactly, you know, the question Marc was posing. You run a vast organisation. I mean, you just told me you employ 330,000 people in India alone. Do you have any sense already of how you are implementing AI and what it's doing?

Julie Sweet, Chair and Chief Executive Officer, Accenture: Yes, let me be practical for a moment. I'm old enough that I remember when I was working at my law firm and email was introduced. And I remember, because I was a young lawyer, you had to stand by the fax machine yourself to fax anything to a client. So email came and we thought, this is amazing. And the heads of my law firm said, well, email is great, but you cannot attach a document and send it to a client because it's not safe. Now think about that.

A little bit of what's happening today when we have these abstract conversations, our employees actually want, in many cases, to use this technology and they're going to start pulling. For example, if you do field sales in consumer goods, you spend most of your time trying to figure out what was the customer, you know, do we have a delivery issue because that's a different department and all that.

Today, with the technology today, with all the appropriate caveats you can very accurately now on your phone be told which customer to go to, whether they had a delivery problem, generate something personalized and then spend most of your time talking to your customer, which is how you really grow.

So when we think about AI, of course there are different risks. The technology is going to change, as Sam said, and it's all about knowing what is it good for now? How can you implement it with the right safeguards? As a CEO, I have someone I can call in my company, they can tell me where AI is used, what the risks are, how we manage them, and, therefore we implement it.

And so we're implementing it broadly in the areas where it's ready now, but I think we have to be careful that we don't ... you know, you started this panel by saying we're going to solve the problem of AI and the immediate thing I said is, I hope we're going to figure out how to use AI, because it's a huge opportunity. And I'm sure Albert's going to talk about what it's going to do for science, but it's also doing a lot of great things for our people who don't want to spend their time reading and trying to figure out things and would love to spend their time with clients, with customers.

So the single biggest difference between those who will use it successfully or not, I believe, are leaders educating themselves, so they're not the ones that told me I couldn't email a document. But you cannot do that if you do not spend the time to learn the technology and then apply it in a responsible way.

Fareed Zakaria, Host, GPS, CNN: So if you were to try and improve productivity at Accenture wouldn't one way be to use AI to have fewer people do what they do now? In other words, you know, you have some large department that sends out forms or things, something like that, the AI would much more efficiently be able to do it. So you need half as many people. Is that likely?

Julie Sweet, Accenture: So just remember, GenAI is the latest AI. I've been doing that for a decade. I used to have thousands of people who manually tested computer systems. That essentially went away in 2015.

So when my investors ask me, when are you going to break the difference between people and technology? I'm saying, I've been doing that every year. I literally serve clients and promise that every year I'm going to find 10% more productivity, before GenAI. This is not new, right? This is more powerful than prior versions of technology, and it's more accessible. And you didn't hear things like 'responsible PC'. Okay, that wasn't a concept, right? So, because it's more powerful, we have to think differently.

In 2019, we had 500,000 people; we have 740,000 now. We introduced technology training for everyone, whether you worked in the mailroom (we still have one), in HR or with our clients, and they had to learn the basics and pass assessments in AI, cloud and data.

In the next six months we will train 250,000 people on GenAI and responsibility. This is basic digital literacy, to run a company and to be good.

And by the way, public service - massively going to improve social services by bringing this powerful technology.

So first and foremost we have to learn what it is, so we can talk about it in a balanced way, and then, absolutely, there are capabilities that don't exist today in most companies, like responsible AI, where if someone uses AI at Accenture it's automatically routed, it's assessed for risk and then mitigations are put in place, and I can call someone and I know exactly where AI is used. That will be ubiquitous in 12 to 24 months across responsible companies.

Fareed Zakaria, Host, GPS, CNN: Albert, the other big revolution that we've been hearing about for the last few years was the revolution in biology. The sequencing of the genome, the ability to gene edit. What does AI do to your field in general, but particularly are those two revolutions now interacting, the AI revolution and the biotech revolution?

I truly believe that we are about to enter a scientific renaissance in life sciences, because of this coexistence of advancements in technology and biology.

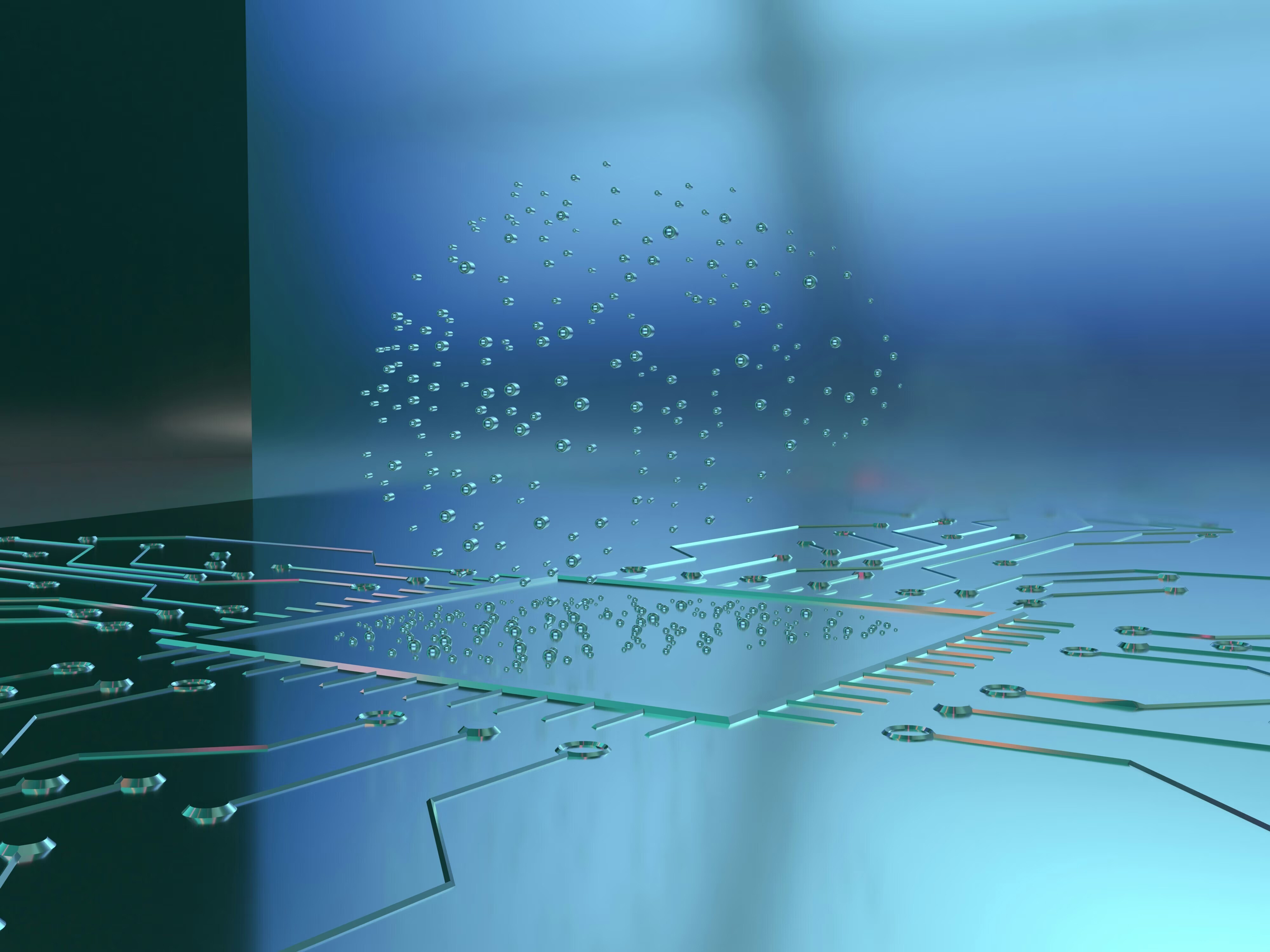

Albert Bourla, Chief Executive Officer, Pfizer, Inc.: The tech revolution is transforming, right now, what we do. Our job is to make breakthroughs that save patients' lives. With AI I can do it faster and do it better. And this is not only because of the advancements in biology, as we spoke about, but also the advancements in technology and the collision between the two of them. They are creating tremendous effects that will allow us to do things that we were not able to do until now.

I truly believe that we are about to enter a scientific renaissance in life sciences, because of this coexistence of advancements in technology and biology.

Fareed Zakaria, Host, GPS, CNN: Give us some sense, give us a few examples or you know, help us understand how these two technologies, these two revolutions interact.

Albert Bourla, Chief Executive Officer, Pfizer, Inc.: Generative AI is something that we were all impressed with when we saw it now let's say basically last year, right? But AI, in different forms, has existed for many, many years and we are using it very, very intensively in our labs.

The best example that I think will resonate is the oral pill for COVID. The chemistry part of it was developed in four months; it usually takes four years. This is because in the typical process, what we call drug discovery, you synthesise millions of molecules and then you try to discover which one among them works.

With AI now we're moving to drug design, instead of drug discovery, so instead of making 3 million molecules, we make 600 and we make them by using tremendous computational power and algorithms that help us to design the most likely molecules that will be successful and then we look to find the best among them.

Four years to four months. Millions of lives were saved because of that.

Fareed Zakaria, Host, GPS, CNN: Mr. Chancellor, you're a politician. The issue that Sam raised about trust, that Marc Benioff raised about trust, does seem central. How do you get people to trust AI? Should they trust AI and should government regulate AI so that it is trustworthy?

Jeremy Hunt, Chancellor of the Exchequer, HM Treasury of the United Kingdom: I think we need to be light touch. Because it's at such an emerging stage, you can kill the golden goose before it has a chance to grow.

I remember the first time I went on ChatGPT, I said is Jeremy Hunt a good Chancellor of the Exchequer? The answer came back: Jeremy Hunt is not Chancellor of the Exchequer. So I said yes he is. And is he a good one? And the reply came back, I'm sorry, we haven't lived up to your expectations, but Jeremy Hunt is not Chancellor of the Exchequer. So there's a kind of certainty about the responses you get, which is often not justified.

But I think we can all do tremendously well out of AI. The UK is already doing well. London is the second largest hub for AI after San Francisco and the UK has just become the world's third largest tech economy, a trillion-dollar tech economy, after the United States and China.

But, as a politician, I look at the big problems that we face. For example, when we have the next pandemic, we don't want to have to wait a year before we get the vaccine. And if AI can shrink the time it takes to get that vaccine to a month, then that is a massive step forward for humanity.

At the moment in the UK, and I think most of the developed world, voters are very angry about their levels of tax. If AI can transform the way our public services are delivered and lead to more productive public services with lower tax levels, that is a very big win.

But I think we have to allow the technology to grow. We have to have our eyes open to the guardrails that we're going to need.

The UK also plays a very big role in global security. We need to be sure, as I was saying to Sam earlier this morning, that a rogue actor isn't going to be able to use AI to build nuclear weapons.

So we need to have our eyes open, which is why the AI Safety Summit that Rishi Sunak organized at the end of last year was so important.

But we need to do it in a light touch way because we have just got to be a bit humble. There's so much that we don't know and we need to understand the potential where this is going to lead us, at a stage where no one really can answer that question.

Fareed Zakaria, Host, GPS, CNN: I feel Albert, that this is an area where regulation, where people are going to be most worried about the issue of trust, which is the combination of AI and medical. And is this you know, is it safe for me to listen to this doctor, to take this drug that has been developed in a computer somewhere? Is there a way to alleviate that?

Albert Bourla, Pfizer, Inc.: I think there is. First of all, we need to understand that AI is a very powerful tool. So in the hands of bad people, it can do bad things for the world, but in the hands of good people, it can do great things for the world.

And I'm certain right now that the benefits clearly outweigh the risks, but I think we need regulations. Right now, there is a lot of debate about how those regulations will set guardrails, and there are some countries that are more focused on how to protect against the bad players. There are some countries that are more focused on how to enable scientists to do all the great things with this tool that we want the world to have, as in the next pandemic.

I think we need to find the right balance that will protect and at the same time enable the world to move on.

Fareed Zakaria, Host, GPS, CNN: Sam, when I look at technology, my fear is often what will bad people do with this technology. But there are many people who fear this much larger issue of the technology ruling over us right?

You've always taken a benign view of AI or a relatively benign view, but people like Elon Musk and sometimes Bill Gates and other very smart people who know a lot about the field are very, very worried.

Why is it that you think they're wrong? What is it that they are not understanding about AI?

Sam Altman, OpenAI OpCo, LLC: Well, I don't think they're guaranteed to be wrong. I mean, I think there's a spirit, there's a part of it that's right, which is this is a technology that is clearly very powerful and that we cannot say with certainty exactly what's going to happen.

And that's the case with all new major technological revolutions.

But it's easy to imagine with this one that it's going to have like massive effects on the world and that it could go very wrong.

The technological direction that we've been trying to push it in, is one that we think we can make safe, and that includes a lot of things.

We believe in iterative deployment, so we put this technology out into the world along the way, so people get used to it so we have time as a society or institutions have time to have these discussions, to figure out how to regulate it, how to put some guardrails in place.

Fareed Zakaria, Host, GPS, CNN: Can you technically put guardrails in and write a kind of constitution for an AI system, would that work?

Sam Altman, OpenAI OpCo, LLC: If you look at the progress from GPT-3 to GPT-4 in how well it can align itself to a set of values, we've made massive progress there.

Now, there's a harder question than the technical one, which is who gets to decide what those values are? And what the defaults are, what the bounds are, how does it work in this country versus that country? What am I allowed to do with it, versus not?

So, that's a big societal question, you know, one of the biggest.

But from the technological approach there, I think there's room for optimism, although the alignment techniques we have now I don't think will scale all the way to much more powerful systems, we're going to need to invent new things.

So I think it's good that people are afraid of the downsides of this technology. I think it's good that we're talking about it. I think it's good that we, and others, are being held to a high standard.

And, you know, we can draw on a lot of lessons from the past about how technology has been made to be safe and also how the different stakeholders in society have handled their negotiations about what safe means and what safe enough is.

But I have a lot of empathy for the general nervousness and discomfort of the world towards companies like us, and you know, the other people doing similar things, which is like, why is our future in their hands? And why are they doing this? Why did they get to do this?

And I think it is on us. I mean, I believe, and I think the world now believes, that the benefit here is so tremendous that we should go do this. But I think it is on us to figure out a way to get the input from society about how we're going to make these decisions, not only about what the values of the system are, but what the safety thresholds are, and what kind of global coordination we need to ensure that stuff that happens in one country does not super negatively impact another - to show that picture.

So I think not having caution, not feeling the gravity of what the potential stakes are would be very bad. So, I like that people are nervous about it. We have our own nervousness, but we believe that we can manage through it and the only way to do that is to put the technology in the hands of people and let society and the technology coevolve and sort of step by step, with a very tight feedback loop and course correction, build these systems that deliver tremendous value while meeting the safety requirements.

Fareed Zakaria, Host, GPS, CNN: Marc, what do you think about this whole question?

Marc Benioff, Salesforce, Inc: Well, I think we've all seen the movies. You know, we saw the movies, we saw HAL, we saw Minority Report and we saw War Games and our imaginations are filled with what happens when we have an AI that's going well and an AI that's going wrong.

And you know, we're moving into a fantastical new world. And I love it when Sam always says, he has this sign above his desk that says, I don't know what's going to happen next exactly, because we don't completely know exactly what is going to happen.

Sam Altman, OpenAI OpCo, LLC: It actually says no one knows what happens next, which I think is an important difference.

Marc Benioff, Salesforce, Inc: Thank you for that correction - hopefully, the spirit of what I was saying was correct.

So you know, our customers are coming to us all the time and they're saying, hey, we want to use this. And our customers, what do our customers want? They want more margin. They want more productivity, they want better customer relationships and they want AI to give that to them.

And so I just got back from Milan and I was down with Gucci. And they got 300 call centre operators down there and they're using our service cloud and they want to use Einstein, which is our AI platform. It'll do a trillion predictive and generative transactions this week. We're partnered with Sam and it's very exciting. It has a trust layer that lets our customers feel comfortable using their product.

And I say to them, what do you really want? And I'm not sure what they want. Are they looking to replace people? Are they looking to add people? To get some kind of value? You know, it's an experimental time for a lot of enterprises when it comes to AI, so we put it in there.

It's now a test that's been going on for six to nine months. Amazing. And something incredible happened. This call centre was doing, you know, you buy something at Gucci and then it needs repair, you've got to have it fixed or replaced or whatever. You call these folks and they're talking to you.

And I walked in and they said this is amazing what is happening. I said what happened? Revenues up 30%. Revenues are up 30%? Yeah. How did that happen? Well, these were all just service professionals, but they now have this generative AI, and predictive as well, they've all been augmented. The service professionals, and this is what they told me, are now also sales professionals and marketing professionals. They're selling products, not just servicing them. They're adding value to the customers and it's a miraculous thing. Their morale went way up. They can't believe what they've been able to achieve.

They didn't even know what they didn't know about the products. It all was kind of being truly tutored and mentored and inspired in them by the AI. And the idea that Einstein could augment them and then, yes, they got their revenue. They got their margin that they so badly wanted.

Even the WEF you know, this app that you're all using is running on Einstein.

So those predictions that you're getting, hey, because you like this AI panel, you should try this WEF panel, and you may look over here, that is our Einstein platform, giving you those ideas.

So this is really the power. But for our customers - and this is a little different between you know, my good friend Sam and my company - your data is not our product. We're not going to take your data, we're not going to use your data. We do not train on your data. We have a separation. We have a trust layer. We never take or use our customers' data into our AI. So your data is not our product. That's very important.

Our core value is also trust, trust and customer success and innovation and equality and sustainability. Our core values have to be represented in how we're building these products for our customers. That is really critical for our teams to understand.

But, back to your comment and Sam's comment, you know, look, this is a big moment for AI. AI took a huge leap forward in the last year, two years.

Right, exactly what Sam said, between one and two and three and then four, and then I'm sure five, six and seven are coming.

And here's the thing. It could go really wrong, which we don't want. We just want to make sure that people don't get hurt. We don't want something to go really wrong. That's why we're going to that Safety Summit. That's why we're talking about trust. We don't want to have a Hiroshima moment. You know, we've seen technology go really wrong and we saw Hiroshima.

We don't want to see an AI Hiroshima. We want to make sure that we've got our head around this now. And that's why I think these conversations and this governance and getting clear about what our core values are is so important.

And yes, our customers are going to get more margin. Those CEOs are going to be so happy, but, at the end of the day, we have to do it with the right values.

Fareed Zakaria, Host, GPS, CNN: Marc brought up the issue of data and data usage. So Sam, I have to ask you: The New York Times is suing you, and the gist of what The Times is saying is that OpenAI, and other AI companies as well, use New York Times articles as an input that allows them to make the language predictions they make, and that they do so excessively and improperly and without compensating The New York Times.

Isn't it true that, at the end of the day, every AI model is using all this data that is in the public domain? And shouldn't the people who wrote that data, whether it's newspapers or comedians who've written jokes, all get compensated?

Sam Altman, OpenAI OpCo, LLC: Many many thoughts about that.

I'll start with the difference between training and what we display when a user sends a query.

By the way, with The New York Times, as I had understood it, we were in productive negotiations with them. We wanted to pay The New York Times a lot of money to display their content. We were as surprised as anybody else to read in The New York Times that they were suing us. That was sort of a strange thing.

But we were, we are open to training on The New York Times, but it's not our priority. We actually don't need to train on their data. I think this is something that people don't understand, any one particular training source doesn't move the needle for us that much.

What we want to do with content owners, like The New York Times and like the deals that we have done with many other publishers and will do more of over time, is this: when a user says, hey, ChatGPT, what happened at Davos today? we would like to display content, link out, show brands of places like The New York Times or The Wall Street Journal or any other great publication and say, here's what happened today. Here's this real-time information. And then we'd like to pay for that, we'd like to drive traffic for that. But it's displaying that information when the user queries, not using it to train the model.

Now we could also train the model on it, but it's not our priority. We're happy not to.

Fareed Zakaria, Host, GPS, CNN: You're happy not to with any specific one, but if you don't train on any data you don't have any facts to train the data on.

Sam Altman, OpenAI OpCo, LLC: I was going to get there to the next point.

One thing that I expect to start changing is that these models will be able to take smaller amounts of higher-quality data during their training process, think harder about it and learn more.

You don't need to read 2,000 biology textbooks to understand, you know, high-school level biology, maybe you need to read one, maybe three, but that 2,001st is certainly not going to help you much. And as our models begin to work more that way, we won't need the same massive amounts of training data.

But what we want, in any case, is to find new economic models that work for the whole world, including content owners, and although I think it's clear that if you read a textbook about physics, you get to go do physics later with what you learned and that's kind of considered okay, if we're going to teach someone else physics using your textbook and using your lesson plans, we'd like to find a way for you to get paid for that.

If you teach our models, if you help provide the human feedback, I'd love to find new models for you to get paid based on the success of that.

So, I think there's a great need for new economic models. I think the current conversation is focused a little bit at the wrong level. And I think what it means to train these models is going to change a lot in the next few years.

Fareed Zakaria, Host, GPS, CNN: Jeremy, you said in an interview, that you thought it was very important that the United States and Britain and countries like that win the AI war versus China. Explain what you mean and why you think it's important.

Jeremy Hunt, HM Treasury of the United Kingdom: I think that probably mischaracterizes the gist of what I was trying to say. I think that when it comes to setting global AI standards, it's very important that they reflect liberal democratic values.

But I think it is really important that we talk to countries like China, because in the end, I mean, I think one of the most interesting things about this morning's discussion is that Sam has a sign saying no one knows the future, but we do have agency over the future.

And that is the tension between the two and I think that we are incredibly lucky that people like Sam are helping to transform humanity's prospects for the future. I don't think anyone in this room thinks the world would be a better place if there wasn't AI.

But we have choices now. And the choice we need to make is how to harness it so that it is a force for good. I actually think that means talking to countries like China, because one of the ways it will be a force for bad is if it just became a tool in a new geostrategic superpower race, with much of the energy put into weapons rather than into things that could actually transform our daily lives. And those are choices we make. And one of the ways that you avoid that happening is by having a dialogue with countries like China over common ground.

But I think we should, whilst being humble about not being able to predict the future, remember that we do have control over the laws, the regulations, we have the ability to shape this journey. And I think we should also look at history and say, you know, look at the Industrial Revolution, the computer revolution, where those revolutions succeeded was where the benefits were spread evenly throughout society and not concentrated in small groups.

In the case of AI, I would say the challenge is to make sure the benefits are spread throughout the world, North and South, developing world and developed world, and not just concentrated in advanced economies. Because otherwise, that will deepen some of the fractures that are already, in my view, taking us in the wrong direction.

Fareed Zakaria, Host, GPS, CNN: Julie, what do you think about this, because Accenture works all over the world. Do you think that this has the potential to create a kind of new arms race in technology?

Julie Sweet, Accenture: Being practical, one would hope, given we've all learned the lessons of having different regulations in data privacy, that we would spend as much time thinking, can we find common ground and use this to work with some of the places where we are having these, around something that is objectively necessary, if you want to have any kind of globalization to say, let's have common standards.

So the optimist side of me says, let's use this as a way of collaboration, taking out some of the geopolitics of it, because there is really good sense in it.

I'm reminded in this conversation, we have a leadership essential at Accenture that I look at, try to look at, every day. It says: lead with excellence, confidence and humility.

And Jeremy, you just talked about being humble. I think one of the most important things we all have to do as leaders and countries is to have a good sense of humility around this. Make sure we're talking to each other. This is why Davos is so important. It's why the work we just did, together with KPMG and PwC and the WEF on trust and digital trust and learning and reading, I think there's a lot we have to do to educate ourselves and then try to start to think differently.

Fareed Zakaria, Host, GPS, CNN: Albert, for you: how do you think China will regulate medicine differently on some of these issues? We've had the issue come up with gene editing, where there was a famous case of a Chinese doctor who decided to try gene editing to prevent AIDS, and the Chinese government actually jailed him because of a global convention. Could you imagine something like that happening with AI?

Albert Bourla, Pfizer, Inc.: I don't know what can happen with AI. As Sam said, nobody knows. And I don't know how China eventually will think about those things.

What I know, is that in life sciences, China is making tremendous progress. Right now, I think there are even more biotechs in China than exist in the US or in the UK or in Europe. I think that the Chinese government is committed to develop basic science in life science and I think in a few years we'll start seeing the first new molecular entities coming from China and not from the US.

Fareed Zakaria, Host, GPS, CNN: I'm going to close by...

Albert Bourla, Pfizer, Inc.: Or let me put it this way: not only from the US.

Fareed Zakaria, Host, GPS, CNN: I'm going to close by asking a question slightly unrelated to all this, but Sam, you were involved in what is perhaps the most widely publicised boardroom scandal in recent decades. What lesson did you learn from that? Other than trust Satya Nadella.

Julie Sweet, Accenture: Sam, at least he's asking you when you only have 42 seconds left!

Sam Altman, OpenAI OpCo, LLC: A lot of things.

Trying to think what I can say.

At some point, you just have to laugh, like at some point, it just gets so ridiculous.

But I think, I mean, I could point to all the obvious lessons: that you don't want to leave important but not urgent problems out there hanging, and, you know, we had known that our board had gotten too small and we knew that we didn't have the level of experience we needed. But last year was such a wild year for us in so many ways that we sort of just neglected it.

I think one more important thing, though, is that as the world gets closer to AGI, the stakes, the stress, the level of tension, that's all going to go up. And for us, this was a microcosm of it, but probably not the most stressful experience we'll ever face.

And one thing that I've sort of observed for a while, is every one step we take closer to very powerful AI, everybody's character gets like, plus 10 crazy points. It's a very stressful thing and it should be because we're trying to be responsible about very high stakes.

And so I think one lesson is, as we get, we the whole world, gets closer to very powerful AI, I expect more strange things. And having a higher level of preparation, more resilience, more time spent thinking about all of the strange ways things can go wrong, that's really important.

The best thing I learned throughout this, by far, was about the strength of our team.

When the board first asked me, like the day after firing me, if I wanted to talk about coming back, my immediate response was no because I was just very pissed about a lot of things about it.

And then, you know, I quickly kind of came to my senses and I realized that I didn't want to see all the value get destroyed, and all these wonderful people who put their lives into this, and all of our customers. But I did also know, and I had seen it from watching the executive team, and really the whole company, do stuff in that period of time, that the company would be fine without me. The team, either the people that I hired or how I mentored them or whatever you want to call it, they were ready to do it.

And that was such a satisfying thing, both personally about whatever I had done, but like knowing that we had built, we, all of us, the whole team, had built this like unbelievably high functioning and tight organisation, that was my best learning of the whole thing.

Fareed Zakaria, Host, GPS, CNN: Thank you all.

As technology becomes increasingly intertwined in our daily lives and important for driving development and prosperity, questions of safety, human interaction and trust become critical to addressing both benefits and risks.

How can technology amplify our humanity?

This is the full audio from a session at the World Economic Forum’s Annual Meeting 2024

Speakers:

Sam Altman

Chief Executive Officer, OpenAI OpCo, LLC

Marc Benioff

Chair and Chief Executive Officer, Salesforce, Inc.

Julie Sweet

Chair and Chief Executive Officer, Accenture

Jeremy Hunt

Chancellor of the Exchequer, HM Treasury of the United Kingdom

Fareed Zakaria

Host, Fareed Zakaria GPS, CNN

Albert Bourla

Chief Executive Officer, Pfizer, Inc.

Check out all our podcasts on wef.ch/podcasts:
